Ben Mann

Tech Lead, Product Engineering

Ben, Tech Lead for Product Engineering at Anthropic, has played a pivotal role in scaling AI products with a deep focus on safety and alignment. From leaving OpenAI to co-founding Anthropic and shaping its culture of mission-driven growth, his journey is one of strategic leadership and foresight in a rapidly evolving industry.

Founder Stats

- AI, Technology

- Started 2021

- $1M+/mo

- 50+ team

- USA

About Ben Mann

Ben Mann leads product engineering at Anthropic, focusing on aligning AI to be helpful, harmless, and honest. Formerly part of GPT-3’s architecture team at OpenAI, he left to prioritize safety at the frontier of AI development. His leadership philosophy blends rapid innovation with principled safeguards, making him a key figure in the global AI conversation.

Interview

August 15, 2025

Why do you think top AI researchers are getting such huge offers from companies like Meta?

It’s a sign of the times. The technology is extremely valuable, and the industry is growing fast. At Anthropic, we’ve been less affected because people here are mission-driven: given the choice between just making money and shaping the future of humanity, they choose the mission.

How do you see these $100 million signing packages from a business perspective?

They sound huge, but compared to the value a single person can add, they’re actually cheap. If someone improves our systems by even a few percent, the revenue impact can be enormous.

Some say AI progress is plateauing. Do you agree?

No. Progress is accelerating. Model release cycles are shorter, and scaling laws still hold. People mistake rapid iteration for slower gains, but the advances are very real.

How do you personally define AGI?

I prefer the term 'transformative AI.' It’s not about matching humans at everything; it’s about causing large-scale societal and economic transformation. The Economic Turing Test is one way to measure that.

What impact do you think AI is already having on jobs?

It’s already here. In customer service, AI resolves over 80% of tickets without humans. In coding, our tools write most of the code, allowing smaller teams to achieve more.

How should people future-proof their careers in this AI-driven economy?

Learn to use the tools ambitiously. Don’t treat them like old tools; push for big changes, experiment, and adapt quickly. Those who adapt fastest will thrive.

You mentioned even non-technical teams benefit from AI. Can you give examples?

Yes. Our legal team uses Claude to redline documents. Finance uses it to run analyses on customers and revenue. The productivity gains are huge.

You left OpenAI to start Anthropic. Why?

We felt safety wasn’t prioritized enough there. Our founding team came from the safety group and wanted a company where safety was the top priority while staying at the frontier.

How do you balance safety and competitiveness?

They’re not opposites. Working on safety often improves capabilities. Claude’s trustworthiness and personality are direct results of our alignment research.

What is Constitutional AI and why is it important?

It’s training AI with guiding principles, such as human rights, baked in from the start. The model critiques and improves its own outputs against these values.

Why be so public about your models’ failures?

Because transparency builds trust. Policymakers and the public deserve straight talk, not sugarcoating.

Some critics say this is fear-mongering. How do you respond?

We avoid hyping unsafe tech even when it could grab headlines. Our focus is ensuring benefits outweigh risks by addressing problems early.

When do you expect superintelligence?

Forecasts like the AI 2027 report put the 50% probability mark at 2028. The exponential growth in compute, algorithms, and infrastructure makes that realistic.

What’s the biggest bottleneck to improving AI models today?

Compute (chips and data centers), plus breakthroughs in algorithms and efficiency. All three drive progress.

How do you personally handle the weight of working on safe superintelligence?

I treat 'busy' as the normal state. It's a marathon, not a sprint. Being surrounded by mission-driven people helps.

You’ve held many roles at Anthropic. Which was your favorite?

Starting the Labs team, now called Frontiers. It bridges research and products, creating tools like Claude Code and the Model Context Protocol.

For people who want to contribute to AI safety but aren’t researchers, what’s your advice?

You don’t need to be an AI scientist. Roles in product, finance, and operations all help drive the mission forward.


Video Interviews with Ben Mann

Anthropic co-founder: AGI predictions, leaving OpenAI, what keeps him up at night | Ben Mann


Related Interviews

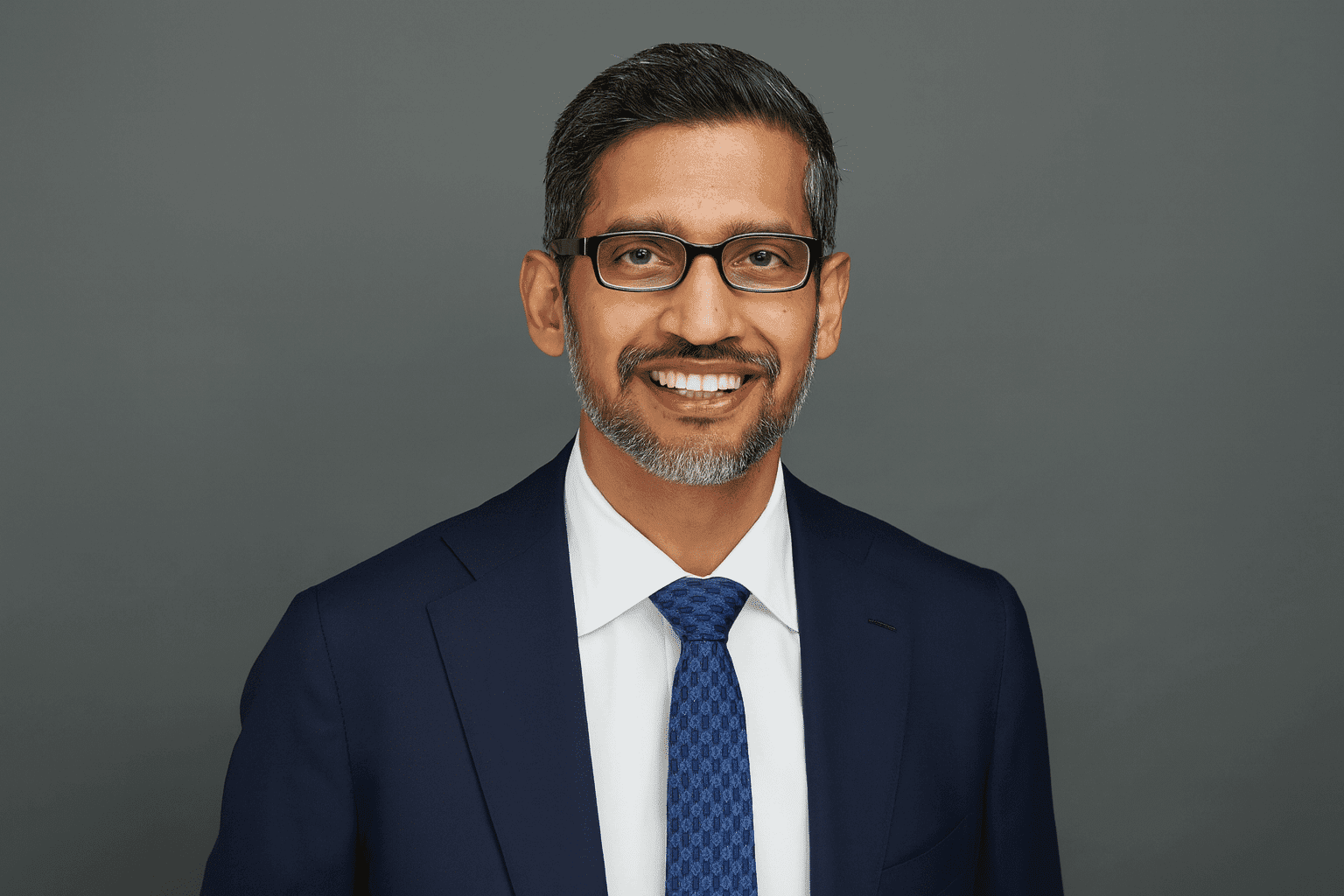

Sundar Pichai

CEO, Google at Google

Sundar Pichai is the engineer-turned-CEO leading Google through what he calls an extraordinary AI inflection point, balancing trillion-dollar infrastructure bets with safety, climate, and long-term societal impact.

Mark Shapiro

President and CEO at Toronto Blue Jays

How I'm building a sustainable winning culture in Major League Baseball through people-first leadership and data-driven decision making.

Harry Halpin

CEO and Co-founder at Nym Technologies

How I'm building privacy technology that protects users from surveillance while making it accessible to everyone, not just tech experts.